PROCESSOR_ARCHITECTURE and Visual Studio Debugging

For years I’ve labored under the impression that the PROCESSOR_ARCHITECTURE environment variable was an indication of the bitness of the current process. Even after comprehending that in a 64-bit process this variable yields AMD64, even when the machine is fitted with an Intel processor (because AMD invented the 64-bit extensions), I’ve written several components in the past that rely on PROCESSOR_ARCHITECTURE, and never expended too much thought about it. I mean, this has to be the simplest and most reliable way to do it, right?

However, a colleague recently asked me if I had any idea why he couldn’t debug an assembly in Visual Studio. He was getting a BadImageFormatException, a surefire indicator that something was trying to load a 64-bit binary into a 32-bit process (or vice-versa). The code he was trying to debug in this case was an add-in to Excel, so he’d configured the project in Visual Studio to “Start external program”, and pointed to Excel, but Excel wouldn’t start when hitting F5, and the logs revealed the exception. Starting Excel directly with the add-in configured worked just fine.

As it turned out, we were using PROCESSOR_ARCHITECTURE in this assembly to determine which version to load (x86 or x64) of a lower-level native dependency. In production, this would work just fine, but when debugging from Visual Studio, the x64 version of the native component was being loaded, which was not making the x86 Excel process very happy at all.

To simplify the diagnosis, I created a new console application in Visual Studio, set the project to debug into C:\Windows\SysWOW64\cmd.exe (the 32-bit command prompt), and hit F5. Sure enough, from the resulting shell, echo %PROCESSOR_ARCHITECTURE% was yielding AMD64, in a 32-bit process! Something was clearly not quite right with this picture.

As it transpires, when debugging this way in Visual Studio, the external program starts in an environment that’s configured for the wider of the bitnesses of the startup project’s current build platform and the started executable. In my simple console app, if I set the platform to x86 instead of the default Any CPU, the resulting command prompt now yielded x86 instead of AMD64 for PROCESSOR_ARCHITECTURE. But if I instead debugged into C:\Windows\System32\cmd.exe, PROCESSOR_ARCHITECTURE yielded AMD64 regardless of the project’s current build platform.

Since the code in question here was in a managed assembly, I switched the PROCESSOR_ARCHITECTURE check to instead consider the value of IntPtr.Size (4 indicates x86, 8 indicates x64). Hopefully when we go to .NET 4.0 we’ll be able to take advantage of Environment.Is64BitProcess and Environment.Is64BitOperatingSystem for this kind of thing, at least from managed code.
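As a rough sketch of that IntPtr.Size check (written in F# here, like the curve-construction posts further down; the C# version is a direct translation): the helper names processBitness and nativeSubdirectory, and the "x86"/"x64" folder names, are made up for illustration and are not taken from the real assembly.

```fsharp
open System

// Bitness of the *current process*, independent of any environment variable.
// IntPtr.Size is 4 in a 32-bit process and 8 in a 64-bit process.
let processBitness () =
    match IntPtr.Size with
    | 4 -> 32
    | 8 -> 64
    | n -> failwithf "Unexpected pointer size: %d" n

// Hypothetical helper: choose the folder holding the matching native dependency.
// The folder names are illustrative assumptions.
let nativeSubdirectory () =
    if processBitness () = 64 then "x64" else "x86"

// Print both views side by side; under the Visual Studio debugger they can disagree.
printfn "%d-bit process; PROCESSOR_ARCHITECTURE=%s; native folder '%s'"
    (processBitness ())
    (Environment.GetEnvironmentVariable("PROCESSOR_ARCHITECTURE"))
    (nativeSubdirectory ())
```

Because the check looks only at the pointer size of the running process, it gives the same answer whether the host was started from Explorer or from the debugger.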

Windows Home Server RIP?

I’ve been running an HP MediaSmart Windows Home Server for a while now, and I’ve actually found it quite useful. There are many PCs in my house (laptops, desktops, home theater PC and media center), and they’ve all been hooked up to the home server, saving me a bunch of pain and hassle.

The PCs back up every day – the whole drive. I can restore a PC from bare metal very quickly (I’ve used this feature on two occasions when hard drives have died). I’m told that this integrates with Time Machine on the Mac, too, but I don’t have a Mac.

Anti-virus on all PCs and the server stays up-to-date automatically thanks to the Avast! anti-virus suite, which has a version specifically for WHS.

The server itself hosts network shares for user files that are duplicated across multiple physical drives in the server.

Adding and removing drives on-the-fly is simple.

WHS has a plugin model that allows me to add Amazon S3 offsite storage as an additional backup for the shares.

I have a ton of media stored on the server, and it’s all streamable to the PCs and a few Xbox 360 consoles (in Media Center Extender mode).

All of this is available remotely when I’m on the road via a secure web site.

Generally, I think this is one of the best products Microsoft has ever shipped, but several things have happened recently to make me think that the ride is over.

First, Microsoft has decided to kill the Drive Extender technology that’s at the heart of the storage and redundancy engine of WHS. This technology has a checkered history – it was destined to become the storage mechanism for all Windows Server versions, but exhibited some nasty bugs early on in life that caused data corruption. Those problems were fixed, and in my experience the technology has been very solid since then, but apparently more problems appeared during testing with several corporate server software products, and Microsoft has decided to take a different path. It looks like the next version of WHS will have to interoperate with a Drobo-like hardware solution instead.

Second, I was recently “volunteered” to give an internal presentation on Windows 7 Explorer, and during my research on this, I discovered that the new Libraries system in Windows 7 doesn’t quite work fully with Windows Home Server. Specifically, a Documents library, when asked to “Arrange by Type”, doesn’t include library locations on a Windows Home Server. The location is indexed (WHS Power Pack 3 is installed, Windows Search is installed and running), but the files on the WHS location simply don’t show up in “Arrange by Type” view (and, curiously, only this view, it seems). Indexed files on a traditional network share on a standard Windows Server 2003 show up just fine in this library view, so I think this is a WHS problem.

Third, I also noticed that files on a regular network share that I’d deleted months ago still appeared in the library in some view modes, but if I try to open them I get a “File Not Found” error. This appears to be a problem with Windows 7 Libraries, to be fair to WHS.

All of this fails to give me a warm and fuzzy feeling about the future of Windows Home Server.

Extending the Gallio Automation Platform

I’ve been doing a lot recently with Gallio, and I have to say I like it. Gallio is an open source project that bills itself as an automation framework, but its most compelling use by far is as a unit testing platform. It supports every unit test framework that I’ve ever heard of (including the test framework that spawned it, MbUnit, which is now another extension to Gallio), and has great tooling hooks for the flexibility to run tests from many different environments.

Gallio came onto my radar when my company had to choose whether to enhance or replace an in-house testing platform which was written back in the days when the unit testing tools available were not all that sophisticated. The application had evolved to become quite feature-rich, but the user interfaces (a Windows Forms application and a console variant) needed a makeover, and there were two schools of thought: invest resources in further enhancing and maintaining an internal tool, or revisit the current external options available to us.

We use NHibernate as a persistence layer, and have been able to benefit greatly from taking advantage of the improvements made to that open-source product, so I was keen to see if we could lean on the wider community again for our new unit testing framework.

I’d had a fair amount of experience with MSTest in a former life, but quickly dismissed it as an option when comparing it against the feature sets available in the latest versions of NUnit and MbUnit. Our in-house framework was clearly influenced by an early version of NUnit, and made significant use of parameterized tests, many of which were quite complex. It seemed that data-driven testing in MSTest hadn’t really progressed beyond the ADO.NET DataSource, whereas frameworks like MbUnit have started to provide quite powerful, generative capabilities for parameterizing test fixtures and test cases.

So one option on the table was to port (standardize, in reality) all of our existing tests to NUnit or MbUnit tests. This would allow us to run the tests in a number of ways, instead of just via the two tools we had built around our own framework. With my developer hat on, I really like the ability to run one or more tests directly from Visual Studio in ReSharper, and for the buildmaster in me, running and tracking those same tests from a continuous integration server like TeamCity is also important. We had neither of these capabilities with our existing platform.

Another factor in our deliberations became the feature set of the UI for our in-house tool. We had some feature requirements here that we would have to take with us going forward, so if we weren’t going to continue to maintain ours, we needed to find a replacement test runner UI that could be extended.

Enter Icarus, which itself is another extension to Gallio that provides a great Windows Forms UI that, all by itself, does everything you might need a standard test runner application to do, but that’s only the beginning. Adding your own functionality to the UI is actually quite easy to achieve. I was able to add an entire test pane with just a handful of source files and a few dozen lines of code, and we were up and running with a couple of core features we needed from our new test runner (and this was an ElementHost-ed WPF panel, at that).

And that’s not the end of the story. Even if we wanted to use Gallio/Icarus going forward, we were still faced with the prospect of porting all of our existing unit tests to one of the many frameworks supported by Gallio (with NUnit and MbUnit being the two favourites). We really didn’t want to do this, and would probably have lived with a bifurcated testing architecture where the existing tests would have stayed with our internal framework and any new tests would be built for NUnit or MbUnit. This would have been less than ideal, but it probably would still have been worthwhile in order to avoid maintaining our own tools while watching the third party tools advance without us.

As it turns out, we didn’t need to make that choice, because adding a whole new test runner framework to Gallio is as easy as extending the Icarus UI. By shamelessly cribbing from the existing Gallio adapters for NUnit and MbUnit, we were able to reuse significant parts of our in-house framework, build a new custom adapter around those, and run all of our existing unit tests alongside new NUnit tests in both the Icarus UI and the Gallio Echo command-line test runners. As an added bonus, since Gallio is also supported by ReSharper, we were now able to run our old tests directly from within Visual Studio, for free, something we had not been able to do with our platform. It took about two days to complete all of the custom adapter work.

I’m quite optimistic that we’ll be able to really enhance our unit testing practices by leveraging Gallio, and without the effort it would take to maintain a lot of complex internal code. The extensibility of Gallio and Icarus is really quite phenomenal – kudos to all those responsible.

Did the Entity Framework team do this by design?

I’ve been playing around quite a bit recently with Entity Framework, in the context of a WCF RIA Services Silverlight application, and just today stumbled upon a quite elegant solution to a performance issue I had feared would not be easy to solve without writing a bunch of code.

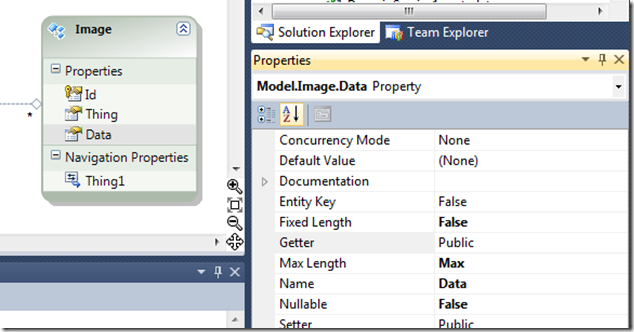

The application in question is based around a SQL Server database which contains a large number of high-resolution images stored in binary form, which are associated with other entities via a foreign key relationship, like this:

Pretty straightforward. Each Thing can have zero or many Image entities associated with it. Now, let’s say we want to present a list of Thing entities to the user. With a standard WCF RIA Services domain service, we might implement the query method like this:

    public IQueryable<Thing> GetThings() {
        return ObjectContext.Things.Include("Images").OrderBy(t => t.Title);
    }

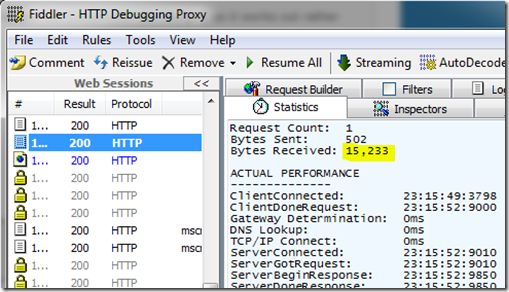

Unfortunately, this query will perform quite poorly if there are many Things referencing many large Images, because all the Images for all the Things will cross the wire down to the client. When I try this for a database containing a single Thing with four low-resolution Images, Fiddler says the following about the query:

If we had a large number of Thing entities, and the user never navigated to those entities to view their images, we’d be transferring a lot of images in order to simply discard them, unviewed. If we leave out the Include("Images") call from the query, we won’t transfer the image data, but the client will also not be aware that there are in fact any Images associated with the Things, and we’d have to make subsequent queries back to the service to retrieve the image data separately.

What we’d like to be able to do is include a collection of the image Ids in the query results that go to the client, but leave out the actual image bytes. Then, we can write a simple HttpHandler that’s capable of pulling a single image out of the database and serving it up as an image resource. At the same time we can also instruct the browser to cache these image resources, which will even further reduce our bandwidth consumption. Here’s what that handler might look like:

    using System;
    using System.ComponentModel;
    using System.Drawing;
    using System.Drawing.Imaging;
    using System.Linq;
    using System.Web;

    public class ImageHandler : IHttpHandler {

        #region IHttpHandler Members

        public bool IsReusable {
            get { return true; }
        }

        public void ProcessRequest(HttpContext context) {
            Int32 id;
            if (context.Request.QueryString["id"] != null) {
                id = Convert.ToInt32(context.Request.QueryString["id"]);
            }
            else {
                throw new ArgumentException("No id specified");
            }

            // the using block disposes the bitmap, so no explicit Dispose() call is needed
            using (Bitmap bmp = ConvertToBitmap(GetImageBytes(id))) {
                // we always re-encode as JPEG, so say so in the response
                context.Response.ContentType = "image/jpeg";
                // let the browser (and any proxies) cache the image for a month
                context.Response.Cache.SetValidUntilExpires(true);
                context.Response.Cache.SetExpires(DateTime.Now.AddMonths(1));
                context.Response.Cache.SetCacheability(HttpCacheability.Public);
                bmp.Save(context.Response.OutputStream, ImageFormat.Jpeg);
            }
        }

        #endregion

        private Bitmap ConvertToBitmap(byte[] bmp) {
            if (bmp != null) {
                TypeConverter tc = TypeDescriptor.GetConverter(typeof(Bitmap));
                var b = (Bitmap) tc.ConvertFrom(bmp);
                return b;
            }
            return null;
        }

        private byte[] GetImageBytes(Int32 id) {
            // dispose the object context once the bytes have been read
            using (var entities = new Entities()) {
                return entities.Images.Single(i => i.Id == id).Data;
            }
        }
    }

It seems like there’s no clean way to achieve what we want, but in fact there is. If you look at the properties available to you on the Data property you can see that the accessibility of these properties can be changed:

By default entity properties are Public, and RIA Services will dutifully serialize Public properties for us. But if we change the accessibility to Internal, RIA Services chooses not to do so, which makes sense. Since the property is Internal, it’s still visible to every class in the same assembly. Therefore, as long as our ImageHandler is part of the same project/application as the entity model and the domain service, it will still have access to the image bytes via Entity Framework, and the code above will work unmodified.

After making this small change in the property editor (and regenerating the domain service), on the client side we no longer see a byte[] as a member of the Image entity:

When I now run my single-entity-with-four-images example, Fiddler gives us a much better result:

The Big Bang Development Model

Last summer, on the first hot day we had (there weren’t that many hot days last year in New York), I turned on my air conditioner to find that although the outdoor compressor unit and the indoor air handler both appeared to be working (fans spinning), there was no cold air to be felt anywhere in the house. We bought the house about three years ago, and at that time the outdoor unit was relatively new (maybe five years old). In the non-summer seasons we've spent in the house, we've always made sure to keep the compressor covered up so that rain, leaves and critters don't foul up the works, and I've even opened it up a couple of times to oil the fan and generally clean out whatever crud did accumulate in there, so I was surprised that the thing didn't last longer than eight years or so.

When the HVAC engineer came to check it out, he found that the local fuse that was installed inline with the compressor was not correctly rated (the original installer had chosen a 60A fuse; the compressor was rated at 40A), and that the compressor circuitry had burned out as a result. For the sake of the correct $5 part eight years ago, a new $4000 compressor was now required.

So this winter, when I turned up the thermostat on the first cold day and there was no heat, I readied the checkbook again. This furnace was the original equipment installed when the house was built in the ‘60s, so I felt sure that I’d need a replacement furnace. I was pleasantly surprised when this time the HVAC guy told me that a simple inexpensive part needed to be replaced (the flame sensor that shuts off the gas if the pilot light goes out). This was a great, modular design for a device that ensured that a full refit or replacement wasn’t required when a single component failed.

It occurred to me that I'd seen analogs of these two stories play out on software projects I've been involved with over the years. A single expedient choice (or a confluence of several such choices), each seemingly innocuous at the time, can turn into monstrous, expensive maintenance nightmares. Ward Cunningham originally equated this effect with that of (technical) debt [http://en.wikipedia.org/wiki/Technical_debt].

What makes the situation worse for software projects over the air conditioner analogy is that continuous change over the life of a software project offers more and more opportunity for such bad choices, and each change becomes more and more expensive as the system becomes more brittle, until all change becomes prohibitively expensive and the system is mothballed, its replacement is commissioned and a whole new expensive development project is begun. I think of this as a kind of Big Bang Development Model, and in my experience this has been the standard model for the finance industry.

For an in-house development shop, an argument can be made that this might not be so bad, although I wouldn't be one to make it – its success is highly dependent on your ability to retain the staff that “know where the bodies are buried”, which in turn is directly proportional to remuneration. If you're a vendor, Big Bang should not be an option – you need to hope that there's no other vendor waiting in the wings when your Big Bang happens.

Of course, today we try to mitigate the impact of bad choices with a combination of unit testing, iterative refactoring and abstraction, but all of this requires management vision, discipline, good governance and three or four of the right people (as opposed to several dozens or hundreds of the wrong ones). Those modern software engineering tools are also co-dependent: effective unit testing requires appropriate abstraction; fearless refactoring requires broad, automated testing; sensible abstractions can usually be refactored more easily than inappropriate ones when the need arises to improve them.

I'm not going to "refactor" my new $4000 compressor, but you can bet that I am going to use a $10 cover and a $5 can of oil.

Windows Mobile got me fired (or at least it could have done)

After resisting getting a so-called “smart”-phone for the longest time, making do with a pay-as-you-go Virgin Mobile phone (I wasn’t a heavy user), I recently stumped up for the T-Mobile HTC Touch Pro2. I was going to be traveling in Europe, and I also wanted to explore writing an app or two for the device (if I can ever find the time), so I convinced myself that the expense was justified. After three months or so, I think I should have gone for the iPhone 3GS.

Coincidentally, the week I got the HTC, my old alarm clock (a cheap Samsonite travel alarm) bit the dust, and so I started using the phone as a replacement (it was on the nightstand anyway, and the screen slides out and tilts up nicely as a display). I also picked up a wall charger so that it could charge overnight and I wouldn’t risk it running out of juice and not waking me up.

Over the last few weeks, however, the timekeeping on the device has started to become erratic. First it would lose time over the course of a day, to the tune of about 15 minutes or so. Then I’d notice that when I’d set it down and plug it in for the night, the time would just suddenly jump back by several hours, and I’d have to reset it. I guess this must have happened four or five times over the course of a couple of months, but it always woke me up on time.

Today I woke up at 8.30am to bright sunshine, my phone proclaiming it to be 4.15am. My 6.00am alarm was clearly useless, and I’m glad the 8am call I had scheduled wasn’t mandatory on my part.

A pretty gnarly bug for a business-oriented smart-phone.

Discount/Zero Curve Construction in F# – Part 4 (Core Math)

All that’s left to cover in this simplistic tour of discount curve construction is to fill in the implementations of the core mathematics underpinning the bootstrapping process.

computeDf simply calculates the next discount factor based on a previously computed one:

    let computeDf fromDf toQuote =
        let dpDate, dpFactor = fromDf
        let qDate, qValue = toQuote
        (qDate, dpFactor * (1.0 /
                            (1.0 + qValue * dayCountFraction { startDate = dpDate; endDate = qDate })))

    let dayCountFraction period = double (period.endDate - period.startDate).Days / 360.0
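As a worked example of what computeDf calculates (new factor = previous factor / (1 + rate × day-count fraction)), here is a self-contained sketch. It assumes that Date is an alias for System.DateTime and that a Period record with startDate and endDate fields exists; neither definition appears in this post, and the quote is made up purely for illustration.

```fsharp
open System

type Date = DateTime                              // assumption: Date behaves like DateTime
type Period = { startDate : Date; endDate : Date } // assumption: shape of the period record

// Actual/360 day count, as in the post
let dayCountFraction period =
    double (period.endDate - period.startDate).Days / 360.0

// Same calculation as the post's computeDf, with the division written directly
let computeDf fromDf toQuote =
    let dpDate, dpFactor = fromDf
    let qDate, qValue = toQuote
    (qDate, dpFactor / (1.0 + qValue * dayCountFraction { startDate = dpDate; endDate = qDate }))

// Illustrative numbers only: a 3.6% simple rate over 100 days,
// so the day-count fraction is 100/360 and rate * fraction is 0.01
let start = Date(2009, 1, 1)
let quote = (start.AddDays(100.0), 0.036)
let _, df = computeDf (start, 1.0) quote
printfn "%.6f" df   // 1 / 1.01, prints 0.990099
```

Note that the definitions are ordered so that dayCountFraction precedes computeDf, since F# requires a function to be defined before it is used.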

findDf looks up a discount factor on the curve, for a given date, interpolating if necessary. Again, here tail recursion and pattern matching make this relatively clean:

    let rec findDf interpolate sampleDate =
        function
        // exact match
        | (dpDate:Date, dpFactor:double) :: tail
            when dpDate = sampleDate
            -> dpFactor
        // falls between two points - interpolate
        | (highDate:Date, highFactor:double) :: (lowDate:Date, lowFactor:double) :: tail
            when lowDate < sampleDate && sampleDate < highDate
            -> interpolate sampleDate (highDate, highFactor) (lowDate, lowFactor)
        // recurse
        | head :: tail
            -> findDf interpolate sampleDate tail
        // falls outside the curve
        | []
            -> failwith "Outside the bounds of the discount curve"

logarithmic does logarithmic interpolation for a date that falls between two points on the discount curve. It is passed as a function value, via the interpolate parameter, to findDf above:

    let logarithmic (sampleDate:Date) highDp lowDp =
        let (lowDate:Date), lowFactor = lowDp
        let (highDate:Date), highFactor = highDp
        lowFactor * ((highFactor / lowFactor) **
                     (double (sampleDate - lowDate).Days / double (highDate - lowDate).Days))
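To make the interpolation concrete, here is a small self-contained sketch. It again assumes Date is an alias for System.DateTime, adds float annotations so the snippet type-checks on its own, and uses invented discount factors. Sampling exactly halfway between the two points should give the geometric mean of the two factors, which is what distinguishes this from straight-line interpolation.

```fsharp
open System

type Date = DateTime   // assumption: Date behaves like DateTime

// Same formula as the post: low * (high / low) ^ (elapsed days / total days)
let logarithmic (sampleDate : Date) highDp lowDp =
    let (lowDate : Date), (lowFactor : float) = lowDp
    let (highDate : Date), (highFactor : float) = highDp
    lowFactor * ((highFactor / lowFactor) **
                 (double (sampleDate - lowDate).Days / double (highDate - lowDate).Days))

// Illustrative points only: 100 days apart, sampled at the midpoint
let lowDate = Date(2009, 6, 17)
let low = (lowDate, 0.99)
let high = (lowDate.AddDays(100.0), 0.97)
let midpoint = lowDate.AddDays(50.0)

let interpolated = logarithmic midpoint high low
let geometricMean = sqrt (0.99 * 0.97)
printfn "interpolated = %.6f, geometric mean = %.6f" interpolated geometricMean
```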

    let newton f df (guess:double) = guess - f guess / df guess

    let rec solveNewton f df accuracy guess =
        let root = (newton f df guess)
        if abs(root - guess) < accuracy then root else solveNewton f df accuracy root

    let deriv f x =
        let dx = (x + max (1e-6 * x) 1e-12)
        let fv = f x
        let dfv = f dx
        if (dx <= x) then
            (dfv - fv) / 1e-12
        else
            (dfv - fv) / (dx - x)
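The three functions above fit together as a root solver: newton takes one Newton step, solveNewton repeats it until successive guesses differ by less than the requested accuracy, and deriv supplies a forward-difference estimate of the derivative when no analytic one is available. A minimal sketch of using them together follows; the target function x² − 2 is my own illustration, not something from the curve code.

```fsharp
// One Newton step: x - f(x) / f'(x)
let newton f df (guess : double) = guess - f guess / df guess

// Iterate until two successive guesses agree to within `accuracy`
let rec solveNewton f df accuracy guess =
    let root = newton f df guess
    if abs (root - guess) < accuracy then root else solveNewton f df accuracy root

// Forward-difference approximation of f'(x), as in the post
let deriv f x =
    let dx = x + max (1e-6 * x) 1e-12
    let fv = f x
    let dfv = f dx
    if dx <= x then (dfv - fv) / 1e-12 else (dfv - fv) / (dx - x)

// Illustrative target: the positive root of x^2 - 2 is sqrt 2
let f x = x * x - 2.0
let root = solveNewton f (deriv f) 1e-12 1.0
printfn "root = %.9f, sqrt 2 = %.9f" root (sqrt 2.0)
```

Passing `deriv f` as the df argument is what lets the same solver work on computeSwapDf, whose derivative with respect to the guessed discount factor is awkward to write by hand.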

    let computeSwapDf dayCount spotDate swapQuote discountCurve swapSchedule (guessDf:double) =
        let qDate, qQuote = swapQuote
        let guessDiscountCurve = (qDate, guessDf) :: discountCurve
        let spotDf = findDf logarithmic spotDate discountCurve
        let swapDf = findPeriodDf { startDate = spotDate; endDate = qDate } guessDiscountCurve
        let swapVal =
            let rec _computeSwapDf a spotDate qQuote guessDiscountCurve =
                function
                | swapPeriod :: tail ->
                    let couponDf = findPeriodDf { startDate = spotDate; endDate = swapPeriod.endDate } guessDiscountCurve
                    _computeSwapDf (couponDf * (dayCount swapPeriod) * qQuote + a) spotDate qQuote guessDiscountCurve tail
                | [] -> a
            _computeSwapDf -1.0 spotDate qQuote guessDiscountCurve swapSchedule
        spotDf * (swapVal + swapDf)

    let zeroCouponRates =
        discs
        |> Seq.map (fun (d, f) -> (d, 100.0 * -log(f) * 365.0 / double (d - curveDate).Days))

Discount/Zero Curve Construction in F# – Part 3 (Bootstrapping)

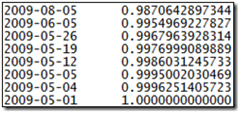

In Part 1, the first lines of code we saw formed the final step in the construction of the discount curve:

    let discs = [ (curveDate, 1.0) ]
                |> bootstrap spotPoints
                |> bootstrapCash spotDate cashPoints
                |> bootstrapFutures futuresStartDate futuresPoints
                |> bootstrapSwaps spotDate USD swapPoints
                |> Seq.sortBy (fun (qDate, _) -> qDate)

The curve begins with today’s discount factor of 1.0 in a one-element list, which is passed to the bootstrap function along with the spot quotes.

    let rec bootstrap quotes discountCurve =
        match quotes with
        | quote :: tail ->
            let newDf = computeDf (List.hd discountCurve) quote
            bootstrap tail (newDf :: discountCurve)
        | [] -> discountCurve

This tail recursion works through the given quotes, computing the discount factor for each based on the previous discount factor in the curve (the details of this we’ll cover in Part 4), and places that new discount factor at the head of a new curve, that is used to continue the recursion. When there are no more quotes, the discount curve is returned, ending the recursion. After bootstrapping the spot quotes, the curve looks like this:

Cash quotes are bootstrapped with respect to the spot discount factor, rather than their previous cash point:

    let rec bootstrapCash spotDate quotes discountCurve =
        match quotes with
        | quote :: tail ->
            let spotDf = (spotDate, findDf logarithmic spotDate discountCurve)
            let newDf = computeDf spotDf quote
            bootstrapCash spotDate tail (newDf :: discountCurve)
        | [] -> discountCurve
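Both bootstrap and bootstrapCash follow the same pattern: walk the quotes, compute a new discount factor, and cons it onto the head of the curve. As a sketch of that pattern, the snippet below shows that the explicit recursion in bootstrap produces the same curve as a List.fold over the quotes. It is self-contained, so it uses a simplified stand-in for computeDf, assumes Date is an alias for System.DateTime, uses List.head (the current name for List.hd), and the quotes are invented.

```fsharp
open System

type Date = DateTime   // assumption: Date behaves like DateTime

// Simplified stand-in for computeDf (Actual/360, simple compounding)
let computeDf (fromDate : Date, fromDf : float) (toDate : Date, rate : float) =
    let fraction = double (toDate - fromDate).Days / 360.0
    (toDate, fromDf / (1.0 + rate * fraction))

// The explicit recursion from the post
let rec bootstrap quotes discountCurve =
    match quotes with
    | quote :: tail ->
        let newDf = computeDf (List.head discountCurve) quote
        bootstrap tail (newDf :: discountCurve)
    | [] -> discountCurve

// The same accumulation expressed as a fold over the quotes
let bootstrapFold quotes discountCurve =
    List.fold (fun curve quote -> computeDf (List.head curve) quote :: curve)
              discountCurve quotes

// Illustrative quotes only
let curveDate = Date(2009, 6, 15)
let quotes = [ (curveDate.AddDays(1.0), 0.0020); (curveDate.AddDays(2.0), 0.0021) ]
let viaRecursion = bootstrap quotes [ (curveDate, 1.0) ]
let viaFold = bootstrapFold quotes [ (curveDate, 1.0) ]
printfn "same curve: %b" (viaRecursion = viaFold)
```

Either way, the newest point ends up at the head of the list, which is why the finished curve has to be sorted by date at the end of the pipeline.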

Once again, the futures offer a twist, albeit a minor one this time:

    let bootstrapFutures futuresStartDate quotes discountCurve =
        match futuresStartDate with
        | Some d ->
            bootstrap (Seq.to_list quotes)
                      ((d, findDf logarithmic d discountCurve) :: discountCurve)
        | None -> discountCurve

Note that these aren’t in date order at this point, since we bootstrapped from the futures start date of Jun 17th, but that’s OK for our purposes – it won’t affect the accuracy of the final curve once we sort it chronologically.

Now to the swaps -- this time it’s the swaps that complicate the procedure. With the assumption that the swap quotes are fair market rates (present value zero), we use a root solver (in this case Newton’s method) to find the discount factor of each swap, pricing them using the curve we’ve built thus far:

    let rec bootstrapSwaps spotDate calendar swapQuotes discountCurve =
        match swapQuotes with
        | (qDate, qQuote) :: tail ->
            // build the schedule for this swap
            let swapDates = schedule semiAnnual { startDate = spotDate; endDate = qDate }
            let rolledSwapDates = Seq.map (fun (d:Date) -> roll RollRule.Following calendar d) swapDates
            let swapPeriods = Seq.to_list (Seq.map (fun (s, e) -> { startDate = s; endDate = e })
                                                   (Seq.pairwise rolledSwapDates))
            // solve, using a numerical derivative of the pricing function
            let accuracy = 1e-12
            let spotFactor = findDf logarithmic spotDate discountCurve
            let f = computeSwapDf dayCount spotDate (qDate, qQuote) discountCurve swapPeriods
            let newDf = solveNewton f (deriv f) accuracy spotFactor
            bootstrapSwaps spotDate calendar tail ((qDate, newDf) :: discountCurve)
        | [] -> discountCurve

For each swap we build a semi-annual schedule from spot to swap maturity using the schedule function, which recursively builds a sequence of dates six months apart:

    let rec schedule frequency period =
        seq {
            yield period.startDate
            let next = frequency period.startDate
            if (next <= period.endDate) then
                yield! schedule frequency { startDate = next; endDate = period.endDate }
        }

    let semiAnnual (from:Date) = from.AddMonths(6)
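For a feel of what schedule produces, here is a self-contained sketch that generates the unrolled dates for a one-year period and pairs them up into coupon periods, as bootstrapSwaps does after rolling. It assumes Date is an alias for System.DateTime and that the Period record has just the two fields shown; the dates are arbitrary.

```fsharp
open System

type Date = DateTime                              // assumption: Date behaves like DateTime
type Period = { startDate : Date; endDate : Date } // assumption: shape of the period record

let semiAnnual (from : Date) = from.AddMonths(6)

// Yield the start date, then recurse from the next date while it is within the period
let rec schedule frequency period =
    seq {
        yield period.startDate
        let next = frequency period.startDate
        if next <= period.endDate then
            yield! schedule frequency { startDate = next; endDate = period.endDate }
    }

// Illustrative one-year period: expect three dates, six months apart
let dates =
    schedule semiAnnual { startDate = Date(2010, 1, 15); endDate = Date(2011, 1, 15) }
    |> Seq.toList

// Consecutive dates become coupon periods (two of them here)
let periods =
    dates
    |> Seq.pairwise
    |> Seq.map (fun (s, e) -> { startDate = s; endDate = e })
    |> Seq.toList

dates |> List.iter (fun d -> printfn "%s" (d.ToString("yyyy-MM-dd")))
```

This prints 2010-01-15, 2010-07-15 and 2011-01-15. In the real bootstrap each of these dates is then rolled to a business day before the periods are formed.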

In Part 4, we’ll dive into the (relatively simple) math functions that underpin the bootstrapping calculations.

No State Machines in Windows Workflow 4.0?

After learning that Workflow Foundation 4.0 is likely to be missing StateMachineActivity, I think I’m going to hold off on any more research into lifecycle-of-OTC-derivative-as-Workflow until 4.0 is released. Which is a shame, because in 3.5 I actually liked what I’d seen so far and was formulating some interesting posts on this.

Convert Word Documents to PDF with an MSBuild Task

I needed to convert a lot of Microsoft Word documents to PDF format, as one step in a continuous integration build of a Visual Studio project. To save a lot of build time, an incremental build of this project needs to spend time converting only the Word documents that have actually changed since the last build. Rather than write a PowerShell script, or other such out-of-band mechanism, a simple custom MSBuild task does the trick nicely.

    using System;
    using System.Collections.Generic;
    using System.IO;
    using Microsoft.Build.Framework;
    using Microsoft.Office.Interop.Word;

    namespace MSBuild.Tasks {

        public class Doc2Pdf : Microsoft.Build.Utilities.Task {

            private static object missing = Type.Missing;

            [Required]
            public ITaskItem[] Inputs { get; set; }

            [Required]
            public ITaskItem[] Outputs { get; set; }

            [Output]
            public ITaskItem[] ConvertedFiles { get; set; }

            public override bool Execute() {
                _Application word = (_Application) new Application();
                _Document doc = null;
                try {
                    List<ITaskItem> convertedFiles = new List<ITaskItem>();
                    for (int i = 0; i < Inputs.Length; i++ ) {
                        ITaskItem docFile = Inputs[i];
                        ITaskItem pdfFile = Outputs[i];
                        Log.LogMessage(MessageImportance.Normal,
                            string.Format(
                                "DOC2PDF: Converting {0} -> {1}",
                                docFile.ItemSpec, pdfFile.ItemSpec));

                        FileInfo docFileInfo = new FileInfo(docFile.ItemSpec);
                        if (!docFileInfo.Exists) {
                            throw new FileNotFoundException("No such file", docFile.ItemSpec);
                        }
                        object docFileName = docFileInfo.FullName;
                        FileInfo pdfFileInfo = new FileInfo(pdfFile.ItemSpec);
                        object pdfFileName = pdfFileInfo.FullName;

                        word.Visible = false;
                        doc = word.Documents.Open(ref docFileName,
                            ref missing, ref missing, ref missing, ref missing, ref missing,
                            ref missing, ref missing, ref missing, ref missing, ref missing,
                            ref missing, ref missing, ref missing, ref missing, ref missing);

                        object fileFormat = 17; // WdSaveFormat.wdFormatPDF
                        doc.SaveAs(ref pdfFileName, ref fileFormat,
                            ref missing, ref missing, ref missing, ref missing, ref missing,
                            ref missing, ref missing, ref missing, ref missing, ref missing,
                            ref missing, ref missing, ref missing, ref missing);

                        convertedFiles.Add(pdfFile);
                    }
                    // publish the converted files through the [Output] parameter
                    ConvertedFiles = convertedFiles.ToArray();
                    return true;
                }
                catch (Exception ex) {
                    Log.LogErrorFromException(ex);
                    return false;
                }
                finally {
                    object saveChanges = false;
                    if (doc != null)
                    {
                        doc.Close(ref saveChanges, ref missing, ref missing);
                    }
                    word.Quit(ref saveChanges, ref missing, ref missing);
                }
            }
        }
    }

Assuming you build the above into an assembly called Doc2PdfTask.dll, and put the assembly in the MSBuild extensions directory, you can then set up your project file to do something like this:

    <Project DefaultTargets="Build"
             xmlns="http://schemas.microsoft.com/developer/msbuild/2003"
             ToolsVersion="3.5">

      <UsingTask AssemblyFile="$(MSBuildExtensionsPath)\Doc2PdfTask.dll"
                 TaskName="MSBuild.Tasks.Doc2Pdf" />

      <Target Name="Build"
              DependsOnTargets="Convert" />

      <Target Name="Convert"
              Inputs="@(WordDocuments)"
              Outputs="@(WordDocuments->'%(RelativeDir)%(FileName).pdf')">
        <Doc2Pdf Inputs="%(WordDocuments.Identity)"
                 Outputs="%(RelativeDir)%(FileName).pdf">
          <Output TaskParameter="ConvertedFiles"
                  ItemName="PdfDocuments" />
        </Doc2Pdf>
      </Target>

      <ItemGroup>
        <WordDocuments Include="**\*.doc" />
        <WordDocuments Include="**\*.docx" />
      </ItemGroup>

    </Project>

All Word documents under the project directory (recursively) will be converted if necessary. Obviously, you’ll need Microsoft Word installed for this to work (I have Office 2007).

Project Vector? You mean like “attack” vector?

It’s been a few years since I’ve done any appreciable amount of work in Java, and I’m sure JavaFX is as secure a technology as any other mass-code-deployment-scheme out there, but I had something of a visceral reaction to a post I ran across on Jonathan Schwartz’s blog regarding the upcoming Java Store (codename “Project Vector”):

“Remember, when apps are distributed through the Java Store, they're distributed directly to the desktop - JavaFX enables developers, businesses and content owners to bypass potentially hostile browsers.”

Now, I happen to agree with Schwartz that a smartly-deployed desktop application can deliver a much better user experience than a typical AJAX web application, and real “desktop” applications are surely a lot easier to maintain, debug, test and quality-assure (in my humble opinion), but something about this statement makes me nervous. Rich Internet Applications, whatever your development platform of choice, still rely on a platform, and in this respect the browser is just another platform.

As far as I’m concerned, the Browser/JVM/CLR had better be hostile to any code that could potentially compromise the security of my customers’ client machines, otherwise I’m not going to be able to sell them software, because they won’t trust it.

And while we’re on the subject, I hope that the Java Store won’t bug me constantly to download the latest New Shiny Thing – my system tray is already lit up like a Christmas tree, thanks very much.

Discount/Zero Curve Construction in F# – Part 2 (Rolling Dates)

In part 1, we used the rollBy function to find the spot date from the curve date:

let spotDate = rollBy 2 RollRule.Following USD curveDate

Unsurprisingly, this rolls the curve date forward by two business days, according to the Following roll rule, accounting for business holidays in the United States. I guess there are several ways to implement this function; recursion is one way:

let rec rollBy n rule calendar (date:Date) =
    match n with
    | 0 -> date
    | x ->
        match rule with
        | RollRule.Actual -> date.AddDays(float x)
        | RollRule.Following -> dayAfter date
                                |> roll rule calendar
                                |> rollBy (x - 1) rule calendar
        | _ -> failwith "Invalid RollRule"

let dayAfter (date:Date) = date.AddDays(1.0)

let rec roll rule calendar date =
    if isBusinessDay date calendar then
        date
    else
        match rule with
        | RollRule.Actual -> date
        | RollRule.Following -> dayAfter date |> roll rule calendar
        | _ -> failwith "Invalid RollRule"

let isBusinessDay (date:Date) calendar =
    not (calendar.weekendDays.Contains date.DayOfWeek || calendar.holidays.Contains date)

And to save space I’ll use a US holiday calendar for only 2009:

let USD = { weekendDays = Set [ System.DayOfWeek.Saturday; System.DayOfWeek.Sunday ];
            holidays = Set [ date "2009-01-01";
                             date "2009-01-19";
                             date "2009-02-16";
                             date "2009-05-25";
                             date "2009-07-03";
                             date "2009-09-07";
                             date "2009-10-12";
                             date "2009-11-11";
                             date "2009-11-26";
                             date "2009-12-25" ] }
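To see the roll logic in action, here is a minimal, self-contained sketch that restates rollBy in dependency order and rolls from Thursday 2 July 2009, the day before a holiday. The RollRule and Calendar types and the trimmed-down usd calendar are assumptions made so the example runs on its own; they are not the definitions from the original code.

```fsharp
open System

// Assumed shapes; the post does not show these definitions.
type RollRule =
    | Actual
    | Following

type Calendar = { weekendDays : Set<DayOfWeek>; holidays : Set<DateTime> }

let isBusinessDay (date : DateTime) (calendar : Calendar) =
    not (calendar.weekendDays.Contains(date.DayOfWeek) || calendar.holidays.Contains(date))

// Following moves a non-business day forward to the next business day;
// Actual leaves the date where it is.
let rec roll rule calendar (date : DateTime) =
    if isBusinessDay date calendar then date
    else
        match rule with
        | RollRule.Actual -> date
        | RollRule.Following -> roll rule calendar (date.AddDays(1.0))

// Step forward n business days, one day at a time.
let rec rollBy n rule calendar (date : DateTime) =
    if n = 0 then date
    else
        match rule with
        | RollRule.Actual -> date.AddDays(float n)
        | RollRule.Following ->
            date.AddDays(1.0)
            |> roll rule calendar
            |> rollBy (n - 1) rule calendar

// Trimmed-down calendar: weekends plus the 3 July 2009 holiday only.
let usd =
    { weekendDays = set [ DayOfWeek.Saturday; DayOfWeek.Sunday ]
      holidays = set [ DateTime(2009, 7, 3) ] }

// Thu 2 July -> (Fri 3 July is a holiday, then the weekend) -> Mon 6 July -> Tue 7 July
let spot = rollBy 2 RollRule.Following usd (DateTime(2009, 7, 2))
printfn "%s" (spot.ToString("yyyy-MM-dd"))
```

Two business days from a Thursday would normally be the following Monday; the holiday pushes the result out to Tuesday, which is exactly the case the calendar lookup exists to handle.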

Discount Dates

let spotPoints =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Overnight -> Some (rollBy 1 RollRule.Following USD curveDate, q)
        | TomorrowNext -> Some (rollBy 2 RollRule.Following USD curveDate, q)
        | _ -> None)
    |> List.sortBy (fun (d, _) -> d)

Cash quotes get similar treatment, except that now, instead of rolling from the curve date, we’re offsetting from the spot date (and rolling if necessary):

let cashPoints =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Cash c -> Some (offset c spotDate |> roll RollRule.Following USD, q)
        | _ -> None)
    |> List.sortBy (fun (d, _) -> d)

Where offset simply adds the relevant number of days, months and/or years to a date:

let offset tenor (date:Date) =
    date.AddDays(float tenor.days)
        .AddMonths(tenor.months)
        .AddYears(tenor.years)
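A small worked example shows why the offset date still needs rolling. This is only a sketch: the Tenor record is re-declared so the snippet stands alone, and the start date is one I picked for illustration.

```fsharp
open System

type Tenor = { years : int; months : int; days : int }

// Same order as the post: days first, then months, then years.
let offset (tenor : Tenor) (date : DateTime) =
    date.AddDays(float tenor.days).AddMonths(tenor.months).AddYears(tenor.years)

let oneWeek = { years = 0; months = 0; days = 7 }
let oneMonth = { years = 0; months = 1; days = 0 }

let start = DateTime(2009, 1, 30)          // a Friday
let weekLater = offset oneWeek start       // 6 February 2009, also a Friday
let monthLater = offset oneMonth start     // AddMonths clamps to 28 February 2009

printfn "%A %A" weekLater.DayOfWeek monthLater.DayOfWeek
```

The one-month offset lands on a Saturday, so a Following roll would be needed to move it to the next business day.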

Swaps – same story:

let swapPoints =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Swap s -> Some (offset s spotDate |> roll RollRule.Following USD, q)
        | _ -> None)
    |> List.sortBy (fun (d, _) -> d)

Futures – not nearly so simple. The June 2009 Eurodollar contract covers the three month period starting on the 3rd Wednesday in June, and ending on the 3rd Wednesday in September, so the quotes actually correspond to discount dates represented by the next futures contract in the schedule. This means we will interpolate the discount point for June (the start of the futures schedule), and add an extra point after the last quote (the end of the futures schedule):

let (sc, _) = List.head futuresQuotes
let (ec, _) = futuresQuotes.[futuresQuotes.Length - 1]

let futuresStartDate =
    findNthWeekDay 3 System.DayOfWeek.Wednesday sc
    |> roll RollRule.ModifiedFollowing USD

let futuresEndDate = (new Date(ec.Year, ec.Month, 1)).AddMonths(3)

// "invent" an additional contract to capture the end of the futures schedule
let endContract = (futuresEndDate, 0.0)

let futuresPoints =
    Seq.append futuresQuotes [endContract]
    |> Seq.pairwise
    |> Seq.map (fun ((_, q1), (c2, _)) ->
        (findNthWeekDay 3 System.DayOfWeek.Wednesday c2
         |> roll RollRule.ModifiedFollowing USD, (100.0 - q1) / 100.0))
    |> Seq.toList
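The findNthWeekDay helper is not shown in this post, so the following is a hypothetical sketch of how it might work, together with the price-to-rate conversion used in the mapping above. The function body and the impliedRate name are my assumptions; only the call shape (n, weekday, contract date) comes from the code above.

```fsharp
open System

// Hypothetical helper: the nth (1-based) occurrence of weekDay in the month of the given date.
// It does not check that the nth occurrence actually falls within that month.
let findNthWeekDay n (weekDay : DayOfWeek) (date : DateTime) =
    let first = DateTime(date.Year, date.Month, 1)
    // Days from the 1st of the month to the first occurrence of weekDay (0 to 6).
    let daysToFirst = (int weekDay - int first.DayOfWeek + 7) % 7
    first.AddDays(float (daysToFirst + 7 * (n - 1)))

// A futures price of 95.150 implies a rate of (100 - 95.150) / 100.
let impliedRate (price : float) = (100.0 - price) / 100.0

// The June 2009 contract period: 3rd Wednesday of June to 3rd Wednesday of September.
let juneStart = findNthWeekDay 3 DayOfWeek.Wednesday (DateTime(2009, 6, 1))   // 17 June 2009
let juneEnd = findNthWeekDay 3 DayOfWeek.Wednesday (DateTime(2009, 9, 1))     // 16 September 2009

printfn "%s to %s at %.4f"
    (juneStart.ToString("yyyy-MM-dd"))
    (juneEnd.ToString("yyyy-MM-dd"))
    (impliedRate 95.150)
```

This matches the pairing in futuresPoints: the June quote is attached to the September date, because that is where the period covered by the June contract ends.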

Discount/Zero Curve Construction in F# – Part 1 (The End)

F# seems to read better from right to left and from bottom to top, so we’ll start with what might be the final step in building an interest rate discount curve, and work back from there:

let curve =
    [ (curveDate, 1.0) ]
    |> bootstrap spotPoints
    |> bootstrapCash spotDate cashPoints
    |> bootstrapFutures futuresStartDate futuresPoints
    |> bootstrapSwaps spotDate USD swapPoints
    |> Seq.sortBy (fun (d, _) -> d)

What goes in?

Let’s look at what feeds the process. The curve date would typically be today’s date:

let curveDate = Date.Today

let spotDate = rollBy 2 RollRule.Following USD curveDate

A collection of quotes would be something like the list below. For illustration purposes we’ll use cash deposits out to three months, a small range of futures, and swaps for the rest of the curve out to thirty years:

let quotes = [ (Overnight, 0.045);
               (TomorrowNext, 0.045);
               (Cash (tenor "1W"), 0.0462);
               (Cash (tenor "2W"), 0.0464);
               (Cash (tenor "3W"), 0.0465);
               (Cash (tenor "1M"), 0.0467);
               (Cash (tenor "3M"), 0.0493);
               (Futures (contract "Jun2009"), 95.150);
               (Futures (contract "Sep2009"), 95.595);
               (Futures (contract "Dec2009"), 95.795);
               (Futures (contract "Mar2010"), 95.900);
               (Futures (contract "Jun2010"), 95.910);
               (Swap (tenor "2Y"), 0.04404);
               (Swap (tenor "3Y"), 0.04474);
               (Swap (tenor "4Y"), 0.04580);
               (Swap (tenor "5Y"), 0.04686);
               (Swap (tenor "6Y"), 0.04772);
               (Swap (tenor "7Y"), 0.04857);
               (Swap (tenor "8Y"), 0.04924);
               (Swap (tenor "9Y"), 0.04983);
               (Swap (tenor "10Y"), 0.0504);
               (Swap (tenor "12Y"), 0.05119);
               (Swap (tenor "15Y"), 0.05201);
               (Swap (tenor "20Y"), 0.05276);
               (Swap (tenor "25Y"), 0.05294);
               (Swap (tenor "30Y"), 0.05306) ]

All of these quotes are tuples whose second value is a float representing the market quote itself. The first value is a discriminated union, which distinguishes the different types of instrument:

type Date = System.DateTime

type FuturesContract = Date

type Tenor = { years:int; months:int; days:int }

type QuoteType =
    | Overnight                    // the overnight rate (one day period)
    | TomorrowNext                 // the one day period starting "tomorrow"
    | Cash of Tenor                // cash deposit period in days, weeks, months
    | Futures of FuturesContract   // year and month of futures contract expiry
    | Swap of Tenor                // swap period in years

let date d = System.DateTime.Parse(d)

let contract d = date d

open System.Text.RegularExpressions

let tenor (t:string) =
    let regex s = new Regex(s)
    let pattern = regex ("(?<weeks>[0-9]+)W" +
                         "|(?<years>[0-9]+)Y(?<months>[0-9]+)M(?<days>[0-9]+)D" +
                         "|(?<years>[0-9]+)Y(?<months>[0-9]+)M" +
                         "|(?<months>[0-9]+)M(?<days>[0-9]+)D" +
                         "|(?<years>[0-9]+)Y" +
                         "|(?<months>[0-9]+)M" +
                         "|(?<days>[0-9]+)D")
    let m = pattern.Match(t)
    if m.Success then
        // weeks are folded into days, seven days per week
        { years = (if m.Groups.["years"].Success then int m.Groups.["years"].Value else 0)
          months = (if m.Groups.["months"].Success then int m.Groups.["months"].Value else 0)
          days = (if m.Groups.["days"].Success then int m.Groups.["days"].Value
                  else if m.Groups.["weeks"].Success then int m.Groups.["weeks"].Value * 7
                  else 0) }
    else
        failwith "Invalid tenor format. Valid formats include 1Y 3M 7D 2W 1Y6M, etc"
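The payoff of making the instrument type a discriminated union is that later steps can pick out one kind of quote with a pattern match. The sketch below shows one plausible way to derive a futures-only list such as the futuresQuotes used in Part 2; that definition does not appear in these posts, so treat it as an assumption. The types are re-declared, and the tenors and contract dates are written out by hand (assuming a contract is represented by the first day of its month) so the snippet runs on its own.

```fsharp
open System

type Tenor = { years : int; months : int; days : int }

type QuoteType =
    | Overnight
    | TomorrowNext
    | Cash of Tenor
    | Futures of DateTime
    | Swap of Tenor

// A few of the quotes from the list above, with tenors and contracts written out by hand.
let quotes =
    [ (Overnight, 0.045)
      (Cash { years = 0; months = 0; days = 7 }, 0.0462)
      (Futures (DateTime(2009, 6, 1)), 95.150)
      (Futures (DateTime(2009, 9, 1)), 95.595)
      (Swap { years = 2; months = 0; days = 0 }, 0.04404) ]

// Assumed definition: keep only the futures, unwrapping the contract date.
let futuresQuotes =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Futures c -> Some (c, q)
        | _ -> None)

printfn "%d futures quotes" (List.length futuresQuotes)
```

The same List.choose shape is what spotPoints, cashPoints and swapPoints use in Part 2, each matching a different case of the union.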

To Begin With…

A few weeks ago at the Wintellect Devscovery conference in New York I was struck by a trio of coincidental insights. The first was from Scott Hanselman’s keynote, during which he suggested that there’s really very little reason why every developer shouldn’t have a personal blog. The second came from John Robbins’ session on debugging, where he reminded us that paper, pencil and our own brains are the best debugging tools we have.

I’ve gone through several methods of “thought management” over the years, ranging from the old Black n’ Red notebook to Microsoft OneNote. A while back one company I worked for required engineers (programming was engineering back then) to maintain a daily log of work and notes in an A4 Black n’ Red, and those books were ultimately the property of the company – how they expected to be able to assimilate or search that information I have no idea.

I like OneNote a lot, but it suffers slightly from the classic “fat client” problem, in that OneNote itself is an installed application that works primarily with local notebook files. It’s possible to put OneNote notebooks on a network share or a Live Mesh folder for sharing outside your LAN, but of course even then you need to have OneNote and/or Live Mesh installed on every machine from which you plan to access your notes. No kiosks need apply. And if you’d like to publish some of your more lucid thoughts…

So now, since I have some personal projects gathering source control dust, I’m going to try a personal blog for my notes. It’ll mean I’ll need to write up my notes a lot more coherently than I usually do, but as Robbins suggested for my third insightful reminder from that conference, oftentimes you solve a particularly gnarly problem after the first ten seconds of trying to explain it to someone else.